我有个朋友呢,特爱看电子书,但是他要求还挺多,非完本不看,评分低不看,完本太久远也不看,等等。

某一天,我突然心血来潮,然后他找到我,给我一个电子书网站,让我能不能把一些数据拿到,我乍眼一看,哎呦,这不是练习requests,像我天天忙成狗的,直接就答应了,主要是他请我和果茶。

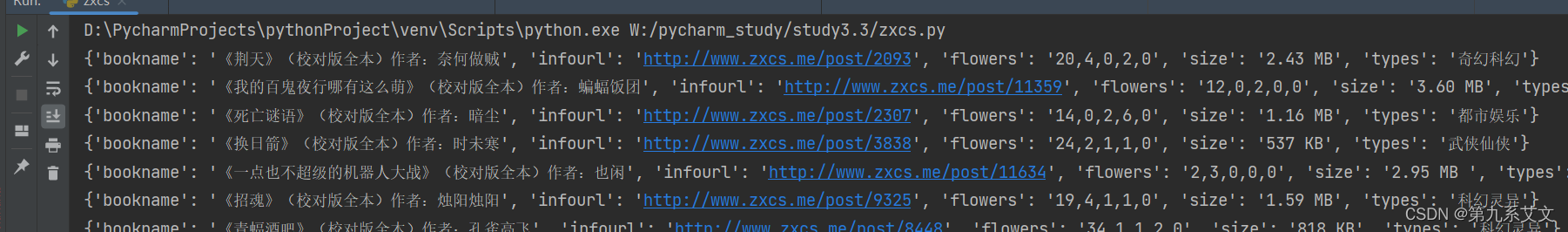

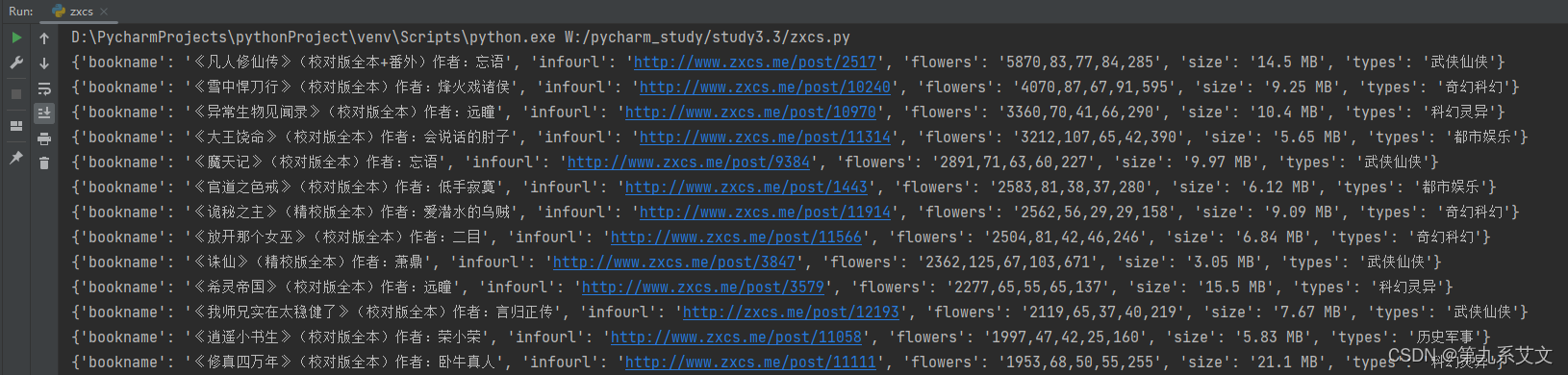

先看看结果,这个是按照仙草排行

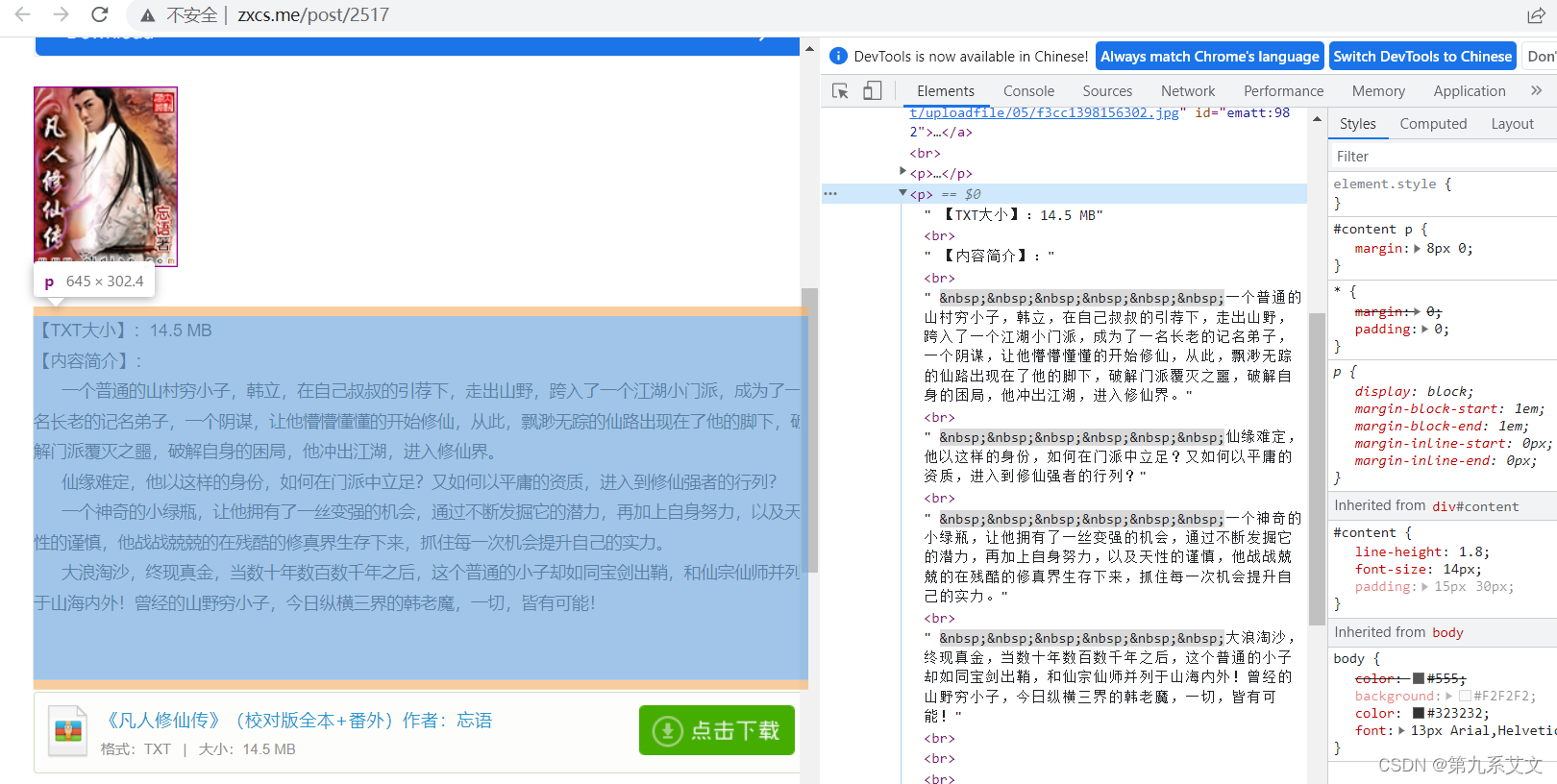

分析

其实,需要的内容也不多,就下面几项

- 电子书名称

- 电子书作者

- 电子书大小

- 电子书仙草毒草

- 电子书简介

- 电子书类型

网页分析,不得不说Python下一个很好用的模块 bs4 ,分析之后,就可以保存数据了,本次保存为sqlite文件,方便操作

实战

python">class Sql_Utils:

def __init__(self):

self.filepath = "./book.data"

self.get_conn()

self.check_init()

def get_conn(self):

try:

self.conn = sqlite3.connect(self.filepath)

except Exception as e:

self.conn = None

print(e)

return self.conn

def check_init(self):

conn = self.conn

if conn:

c = conn.cursor()

try:

cursor = c.execute('''

select * from bookinfos limit 10;

''')

# for row in cursor:

# print(row)

except Exception as e:

print(e)

self.__init_database()

finally:

conn.close()

else:

print("Opened database failed")

def __init_database(self):

conn = self.conn

c = conn.cursor()

c.execute('''CREATE TABLE bookinfos

(ID INTEGER PRIMARY KEY NOT NULL,

BOOKID INT NOT NULL UNIQUE,

NAME CHAR(200) NOT NULL UNIQUE,

INFOURL CHAR(300) NOT NULL,

DOWNLOADURL CHAR(300) NOT NULL,

FLOWERS CHAR(300) NOT NULL,

DESCRIPTION CHAR(1000) NOT NULL,

SIZE CHAR(30) NOT NULL,

TYPES CHAR(30) NOT NULL);''')

conn.commit()

conn.close()

def insert(self, *args, **kwargs):

bookid = args[0]

books = self.query(bookid)

if len(books) == 0:

if len(kwargs) == 7:

bookname = kwargs.get("bookname")

infourl = kwargs.get("infourl")

downloadurl = kwargs.get("downloadurl")

size = kwargs.get("size")

types = kwargs.get("types")

description = kwargs.get("description")

flowers = kwargs.get("flowers")

else:

raise KeyError

else:

bookname = kwargs.get("bookname", books[0].get("bookname"))

infourl = kwargs.get("infourl", books[0].get("infourl"))

downloadurl = kwargs.get("downloadurl", books[0].get("downloadurl"))

size = kwargs.get("size", books[0].get("size"))

types = kwargs.get("types", books[0].get("types"))

description = kwargs.get("description", books[0].get("description"))

flowers = kwargs.get("flowers", books[0].get("flowers"))

conn = self.get_conn()

c = conn.cursor()

try:

c.execute("""INSERT INTO bookinfos(BOOKID,NAME,INFOURL,DOWNLOADURL,FLOWERS,DESCRIPTION,SIZE,TYPES)

VALUES(?,?,?,?,?,?,?,?)""", (bookid, bookname, infourl, downloadurl, flowers, description, size, types))

conn.commit()

print("%s 插入成功" % bookname)

except Exception as e:

print("%s 插入失败 %s" % (bookname, e))

finally:

conn.close()

def query(self, bookid):

self.get_conn()

conn = self.conn

c = conn.cursor()

books = []

try:

if bookid == -1:

cursor = c.execute("""select * from bookinfos""")

else:

cursor = c.execute("""select * from bookinfos where BOOKID =?""", (bookid,))

for row in cursor:

books.append({

# "bookid": row[1],

"bookname": row[2],

"infourl": row[3],

# "downloadurl": row[4],

"flowers": row[5],

# "description":row[6],

"size": row[7],

"types": row[8],

})

except Exception as e:

print("查询异常 %s" % e)

finally:

conn.close()

return books

数据库操作对象已经完成了,接下来就是页面分析查找有用数据了

通过浏览器f12进入开发者模式

1.获取电子书下载地址

python">def get_book_download_url(bookid, headers):

baseurl = "http://zxcs.me/post/%s" % bookid

req = requests.get(baseurl, headers=headers)

if req.status_code == 200:

html_body = req.content

soup = BeautifulSoup(html_body, "html.parser")

for a in soup.find_all('a'):

if "点击下载" in a.get("title", ""):

endpagurl = a.get("href")

return endpagurl

else:

print("url: %s ERROR" % baseurl)

return "ERROR"

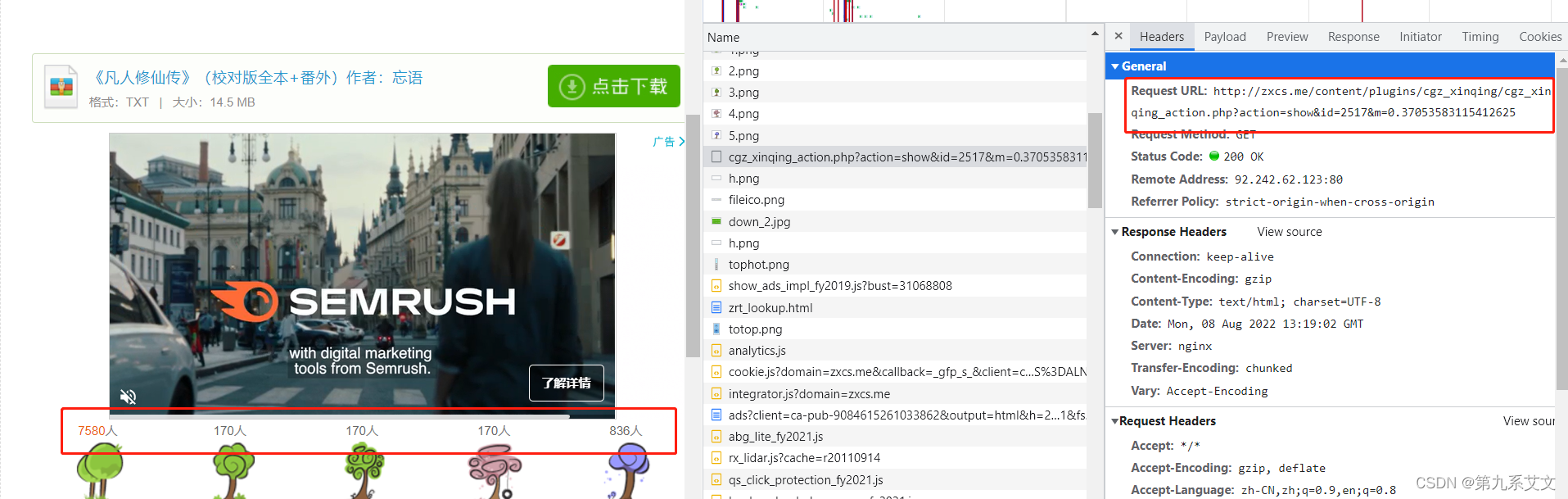

2.获取电子书仙草毒草数据信息,该数据无需分析页面,可以直接通过请求拿到

python">def get_book_flowers(bookid, get_flower_tep, headers):

flower_url = get_flower_tep % (bookid, random.random())

req = requests.get(flower_url, headers=headers)

return req.text3.获取电子书大小和简介

python">def get_book_infos(bookurl, headers, bookname):

req = requests.get(bookurl, headers=headers)

if req.status_code == 200:

html_body = req.content

soup = BeautifulSoup(html_body, "html.parser")

sinfo = []

for a in soup.find_all('p'):

if "内容简介" in str(a):

for i in a:

si = str(i)

if "link" in si:

break

if "<br/>" in si or "\n" == si:

continue

sinfo.append(str(i).strip("\r\n").strip("\xa0").strip("\t").strip("\u3000"))

try:

return sinfo[0].split(":")[1], "".join(sinfo[2:])

except Exception as e:

print("ERROR %s 大小和描述获取失败 %s" % (bookname, e))

return "获取失败", "获取失败"

4,根据连接获取信息并存储数据库

python">def get_this_page_url(baseurl, headers, types):

xurl = []

req = requests.get(baseurl, headers=headers)

if req.status_code == 200:

html_body = req.content

soup = BeautifulSoup(html_body, "html.parser")

# print(soup.prettify())

count = 0

for a in soup.find_all('a'):

# print(a)

if a.parent.name in "dt":

# print({"%s"%a.string:a.get("href")})

xurl.append({int(a.get("href").split("/")[-1]): {"%s" % a.string: a.get("href")}})

sql_conn = Sql_Utils()

infourl = a.get("href")

bookid = infourl.split("/")[-1]

books = sql_conn.query(bookid)

bookname = a.string

if len(books) != 0:

print("%s 已经存在,继续操作" % bookname)

continue

downloadurl = get_book_download_url(bookid, headers)

flowers = get_book_flowers(bookid, get_flower_tep, headers)

size, description = get_book_infos(infourl, headers, bookname)

sql_conn.insert(bookid, bookname=bookname, infourl=infourl,

flowers=flowers, downloadurl=downloadurl, size=size,

description=description, types=types)

return xurl

def get_this_all_url(baseurl, headers, types):

endurl = get_this_end_url(baseurl, headers)

endnumlist = endurl.split("/")

all_url = []

for page in range(1, int(endnumlist[-1]) + 1):

endnumlist[-1] = str(page)

print("*" * 200)

print("%s 总共%s页,现在进行到%s页" % (types, endurl.split("/")[-1], page))

this_page_url = get_this_page_url("/".join(endnumlist), headers, types)

# print("page:%s this_page_url:%s"%(page,this_page_url))

all_url.extend(this_page_url)

return all_url接下来,就整理一下剩余代码,如下

python">import random

import sqlite3

import requests

from bs4 import BeautifulSoup

zxcs_urls = {

"奇幻科幻": "http://zxcs.me/sort/26",

"都市娱乐": "http://zxcs.me/sort/23",

"武侠仙侠": "http://zxcs.me/sort/25",

"科幻灵异": "http://zxcs.me/sort/27",

"历史军事": "http://zxcs.me/sort/28",

"竞技游戏": "http://zxcs.me/sort/29",

"二次元": "http://zxcs.me/sort/55",

}

headers = {'User-Agent': 'Mozilla/8.0 (iPhone; CPU iPhone OS 16_0 like Mac OS X) AppleWebKit'}

get_flower_tep = "http://zxcs.me/content/plugins/cgz_xinqing/cgz_xinqing_action.php?action=show&id=%s&m=%s"

def make_book_data():

for types, baseurl in zxcs_urls.items():

get_this_all_url(baseurl, headers, types)

def get_book_l():

sql_u = Sql_Utils()

booklist = sql_u.query(-1)

# 仙草排行

booklist.sort(key=lambda x: int(x.get("flowers").split(",")[0]), reverse=True)

# 毒草排行

# booklist.sort(key=lambda x:int(x.get("flowers").split(",")[-1]),reverse=True)

# 仙草百分比排行

# booklist.sort(key=lambda x:float(int(x.get("flowers").split(",")[0])*10/(int(x.get("flowers").split(",")[-1])+int(x.get("flowers").split(",")[0]))),reverse=True)

for book in booklist:

if book.get("types") == "竞技游戏":

continue

print(book)

if __name__ == '__main__':

'''

先运行 make_book_data()

等这个运行之后,就可以查询啦

'''

make_book_data()

get_book_l()

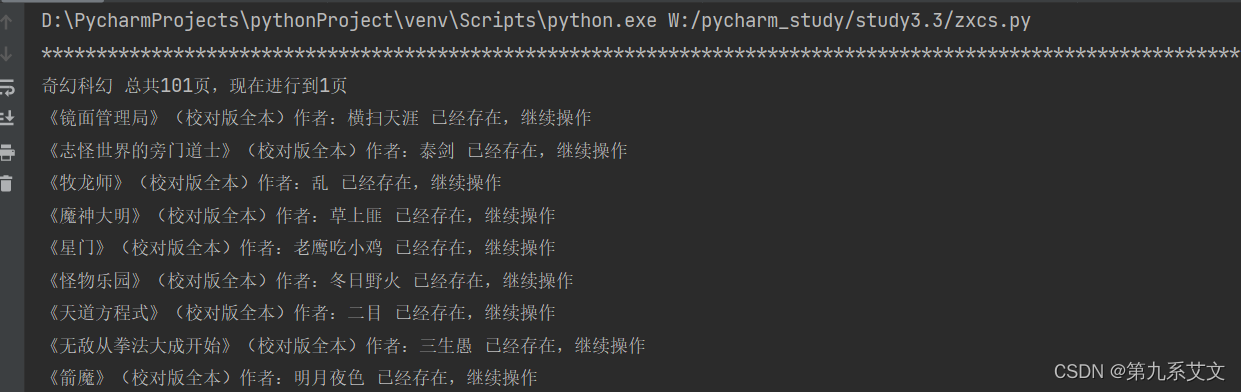

先运行make_book_data() ,将数据存储到数据库,结果如下

已经在数据库里面存在的,就不会再次存储了

通过 get_book_l() 查询到仙草毒草比例